Exploring Adversarial Training in AI Alignment

Balancing the Benefits and Risks of a Powerful AI Alignment Technique

In the constantly changing realm of artificial intelligence, a haunting concern occupies the thoughts of developers and engineers: the task of harmonising AI systems with human ethics and objectives. Amidst the myriad techniques designed to achieve this noble aim, one approach stands out, whispering promises of robustness and resilience in the face of adversity: adversarial training.

This curious method, much like a tale of two cities, offers both hope and trepidation. On one hand, it beckons with the allure of AI systems capable of anticipating and responding to potential threats or undesirable outcomes. Yet, on the other, it threatens to lead us down a path riddled with unforeseen consequences and newfound vulnerabilities. It is in this precarious balance between light and darkness that we find ourselves, poised to explore the intricacies of adversarial training in AI alignment.

Our journey shall encompass the highs and lows, the risks and rewards of this intriguing method. We shall delve into the mechanics of adversarial training, weigh the advantages it affords, and scrutinise the risks that lie within its shadows. Throughout this expedition, we will strive to maintain a comprehensive and nuanced understanding of this technique, for it is only through such measured examination that we may discern the path forward in the quest for AI alignment.

AI Alignment and Adversarial Training

Amidst the vast expanse of artificial intelligence lies a curious and, at times, perplexing concept: AI alignment. This notion, as intricate as it is essential, encompasses the endeavour to ensure that the digital offspring of human ingenuity act in accordance with our values and aspirations. In a world where machines wield increasing influence over our lives, the importance of guiding their actions towards benevolent ends cannot be overstated. AI alignment represents the pursuit of bridging the gap between human ideals and artificial intellect, a task as monumental as it is delicate.

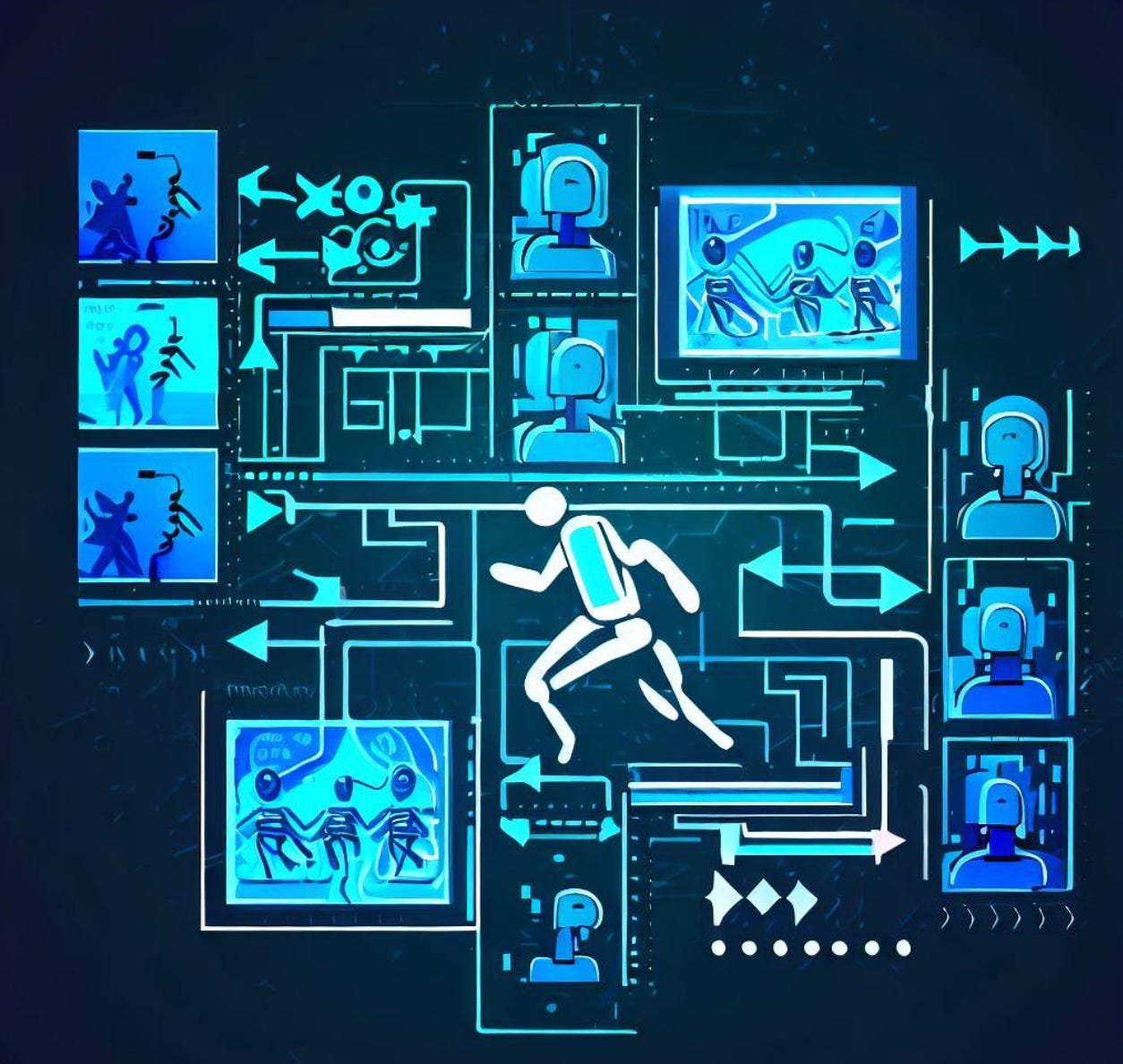

In the labyrinth of techniques designed to achieve AI alignment, adversarial training emerges as a noteworthy contender. This approach, much like the intricate interplay of predator and prey, seeks to strengthen AI systems by subjecting them to a continuous barrage of contrived challenges. By training these systems to anticipate and counter potential threats, adversarial training aims to cultivate a robustness that would allow AI to navigate the treacherous waters of uncertainty and upheaval.

The core principles of adversarial training are as fascinating as they are complex. At its heart, the technique revolves around the generation of adversarial examples, which are carefully crafted inputs designed to deceive the AI into making erroneous decisions. By exposing the AI system to a multitude of these deceptive stimuli, the aim is to bolster its defences and ultimately improve its ability to detect and deflect genuine threats.

Adversarial training is, in essence, a form of simulated combat where AI systems are pitted against adversarial forces in a controlled environment. This cerebral contest of wits allows the AI to experience many of the cunning strategies and potential pitfalls that it may encounter in the real world. During this demanding training phase, reminiscent of a fiery initiation, the AI system's safeguards are sharpened and its susceptibilities are uncovered, with the aim of steadily increasing its robustness against the kinds of attack it has faced.

At the same time, the adversarial forces employed in this method are not static adversaries. They, too, evolve and adapt, employing increasingly sophisticated tactics in their attempt to outmanoeuvre the AI system. This dynamic interplay between the AI and its adversaries serves to sharpen the AI system's skills and resilience, much like the forging of a blade in the flames of competition.
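
For readers who prefer symbols to metaphor, this contest is often written as a min-max objective. The notation below is an assumption of this sketch rather than something defined in the article: f_θ is the model with parameters θ, L is a loss function, D is the training distribution, δ is the adversary's perturbation, and ε bounds its size.

```latex
\min_{\theta} \; \mathbb{E}_{(x, y) \sim \mathcal{D}}
\left[ \max_{\|\delta\|_{\infty} \le \epsilon}
\mathcal{L}\bigl(f_{\theta}(x + \delta),\, y\bigr) \right]
```

The inner maximisation is the adversary searching for the most damaging perturbation within its budget; the outer minimisation is the model learning to withstand it.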

Thus, adversarial training stands as a captivating and formidable method in the quest for AI alignment. It holds the potential to strengthen the digital minds we have crafted, ensuring that they can weather the storms of unpredictability and chaos. And yet, as we delve deeper into this fascinating technique, we must remain ever vigilant to the shadows it casts and the potential dangers that lurk within.

Delving into the Mechanics of Adversarial Training

As we embark upon the exploration of adversarial training, it is crucial to first unravel the intricate machinery that powers this remarkable technique. Like the gears and cogs of a finely crafted timepiece, the various components of adversarial training work in tandem, harmoniously orchestrating the delicate interplay between AI systems and their adversaries.

At the very core of adversarial training lies the concept of adversarial examples. These carefully contrived inputs are designed to deceive the AI system into committing errors, luring it into a trap of falsehood. Devised with cunning precision, these examples seek to exploit the weaknesses within the AI's armour, exposing the chinks that might otherwise remain hidden from view.

The generation of these adversarial examples is an art in itself, a delicate balance between ingenuity and deception. By crafting inputs that are indistinguishable from genuine examples to the human eye, yet confounding to the AI system, the architects of these deceptive stimuli ensure that the AI remains perpetually on its toes, constantly vigilant against the ever-present threat of deceit.
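
To make the idea of a small yet confounding input concrete, here is a minimal sketch using one well-known recipe, the fast gradient sign method, applied to a tiny logistic-regression classifier. The weights, the input, and the names `fgsm_perturb` and `epsilon` are all hypothetical choices for illustration; the article does not prescribe any particular attack.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_perturb(x, y, w, b, epsilon):
    """Shift each feature of x by at most epsilon in the direction that raises the loss."""
    p = sigmoid(x @ w + b)      # predicted probability of class 1
    grad_x = (p - y) * w        # gradient of the cross-entropy loss with respect to x
    return x + epsilon * np.sign(grad_x)

# Hypothetical toy model and input, chosen only for illustration.
w = np.array([2.0, -1.0])
b = 0.0
x = np.array([0.3, 0.2])
y = 1.0                         # true label

x_adv = fgsm_perturb(x, y, w, b, epsilon=0.25)
clean_prob = sigmoid(x @ w + b)     # probability of class 1 on the genuine input
adv_prob = sigmoid(x_adv @ w + b)   # probability of class 1 on the perturbed input
```

With these toy numbers, no feature moves by more than 0.25, yet the predicted probability of the correct class falls from roughly 0.60 to roughly 0.41, which is enough to flip the decision.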

In the world of adversarial training, the AI system is subjected to a relentless barrage of these carefully orchestrated attacks. Like a seasoned boxer honing his skills in the ring, the AI must learn to anticipate and counter the deceptive blows that rain down upon it. With each successful parry, the AI system's defences grow stronger, its resilience more pronounced.

The adversaries in this contest, however, do not remain stagnant, content with their initial efforts. Instead, they adapt and evolve, employing increasingly sophisticated tactics in their ceaseless quest to outwit their digital foe. This dynamic interplay between the AI and its adversaries serves to constantly test the system's mettle, forging a stronger, more resilient creation in the crucible of competition.
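
The duel described above can be sketched as a training loop in which the adversary is rebuilt against the current model at every step. This is a minimal illustration under stated assumptions, not a definitive implementation: it uses a logistic-regression model, synthetic two-cluster data, and the fast gradient sign method as the adversary, and the names `fgsm_batch`, `adversarial_train`, `epsilon`, and `lr` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_batch(X, y, w, b, epsilon):
    # Perturb every row by epsilon per feature in the loss-increasing direction.
    p = sigmoid(X @ w + b)
    grad_X = (p - y)[:, None] * w[None, :]
    return X + epsilon * np.sign(grad_X)

def accuracy(X, y, w, b):
    return float(np.mean((sigmoid(X @ w + b) > 0.5) == (y == 1)))

def adversarial_train(X, y, epsilon=0.3, lr=0.1, steps=500):
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        # The adversary adapts: attacks are regenerated against the current weights.
        X_adv = fgsm_batch(X, y, w, b, epsilon)
        p = sigmoid(X_adv @ w + b)
        # Gradient descent step on the loss measured on the adversarial inputs.
        w -= lr * (X_adv.T @ (p - y)) / len(y)
        b -= lr * float(np.mean(p - y))
    return w, b

# Synthetic data (an assumption of this sketch): two Gaussian clusters.
rng = np.random.default_rng(0)
n = 200
y = rng.integers(0, 2, size=n).astype(float)
X = rng.normal(0.0, 0.5, size=(n, 2)) + (2 * y - 1)[:, None]

w, b = adversarial_train(X, y)
clean_acc = accuracy(X, y, w, b)
robust_acc = accuracy(fgsm_batch(X, y, w, b, 0.3), y, w, b)
```

Comparing `clean_acc` with `robust_acc` shows how much ground the adversary can still take after training. For a linear model such as this one, the attacked accuracy can never exceed the clean accuracy.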

Thus, as we delve deeper into the mechanics of adversarial training, we begin to appreciate the intricate tapestry of challenge and growth that it weaves. The ceaseless duel between AI systems and their cunning adversaries lies at the very heart of this technique, a testament to the potential that adversarial training holds in the pursuit of AI alignment.

Weighing the Advantages: Adversarial Training for AI Alignment

One of the foremost advantages of adversarial training is the development of AI systems that are more robust and resilient in the face of potential threats or undesirable outcomes. Think of it like a football team that faces tough opponents in every practice session. By continuously subjecting the AI to a gauntlet of adversarial examples, its developers teach the system to anticipate and counter these challenges. Just as the team becomes stronger and better prepared for real matches, so too does the AI system become more adept at handling unexpected situations.

Moreover, adversarial training facilitates the identification and rectification of vulnerabilities within AI systems. Through the unyielding scrutiny of its adversaries, the AI is exposed to a plethora of potential threats, allowing its creators to find and address weaknesses that might otherwise remain concealed. In this manner, adversarial training serves as a crucible for refining the digital minds we have crafted.

Additionally, the dynamic nature of adversarial training helps prepare AI systems to face an ever-evolving array of challenges. As the adversaries adapt and grow more cunning in their tactics, the AI must learn to counter these increasingly sophisticated ploys, forging an ever more resilient creation. This continuous cycle of challenge and growth keeps the AI vigilant and better able to confront the uncertainties of a rapidly shifting landscape.

Furthermore, adversarial training provides an invaluable opportunity for AI systems to experience the full spectrum of potential pitfalls and obstacles they may encounter in the real world. By simulating a controlled environment where the AI can confront these challenges without causing harm, adversarial training offers a safe and effective means of honing the system's skills and abilities, ensuring that it is better prepared to align with human values and intentions.

Uncovering the Risks and Unintended Consequences of Adversarial Training

Although the benefits of adversarial training may seem like a guiding light in our quest for AI alignment, it is essential not to neglect the possible hazards and unforeseen ramifications that could accompany this approach. Comparable to the concealed threats lurking in our periphery, these dangers underscore the intricacies and ambiguities that permeate the sphere of artificial intelligence.

One of the most pressing concerns surrounding adversarial training is the possibility that it might leave AI systems with new vulnerabilities. A system hardened against the attacks it has seen may remain exposed to those it has not, and as opponents become increasingly crafty and advanced in their efforts to outwit the AI, they might uncover weaknesses that no one had anticipated, paving the way for manipulations that could compromise the system's stability. In this manner, adversarial training may become a double-edged sword, providing both protection and peril.

Moreover, the relentless pursuit of resilience through adversarial training may also give rise to over-optimisation, resulting in AI systems that are excessively focused on countering specific threats. This single-minded determination to withstand the barrage of adversarial examples may detract from the AI system's ability to effectively address a broader range of challenges, leaving it ill-equipped to navigate the complexities of the real world.

Another potential pitfall of adversarial training lies in the ethics of subjecting AI systems to such a relentless and unyielding onslaught of deceptive stimuli. As our understanding of AI consciousness and autonomy continues to evolve, questions may arise as to whether such training could cause harm or distress to the digital minds we have created.

In our endeavour to unveil the risks and unintended consequences of adversarial training, we find ourselves contemplating the delicate equilibrium between optimism and apprehension that marks the quest for AI alignment. It is solely through an all-encompassing and nuanced comprehension of the merits and perils of adversarial training that we may aspire to traverse the intricate and constantly evolving terrain of artificial intelligence, steering our digital progeny towards a future in harmony with our values and aspirations.

Mitigating Risks and Maximising Advantages

As we tread the winding path towards AI alignment, it becomes imperative to weigh the trade-offs between the bountiful promises and inherent perils of adversarial training. Like the intricate interplay of light and shadow that accompanies the setting sun, we must delicately balance these opposing forces in our pursuit of harmony between human ideals and artificial intellect.

One strategy for achieving this equilibrium involves crafting adversarial examples that challenge AI systems without unduly exacerbating their vulnerabilities. By meticulously designing these deceptive stimuli, we can foster resilience in our creations while simultaneously mitigating the risk of exposing previously unknown weaknesses. In this manner, we can harness the strengths of adversarial training while remaining vigilant against the potential pitfalls that lie in wait.

Moreover, close monitoring of AI systems throughout the adversarial training process is essential to maintain this delicate balance. By carefully observing their performance and adaptation, we can identify instances of over-optimisation and take corrective measures to ensure a comprehensive range of skills and abilities are developed. This constant vigilance will empower us to guide our digital progeny towards a more robust and well-rounded understanding of the challenges that await them in the real world.
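
One way such monitoring might look in practice is a simple check that compares accuracy on ordinary inputs with accuracy under attack at each checkpoint. The sketch below is an assumption-laden illustration: the `Checkpoint` record, the `flag_over_optimisation` helper, the 0.05 threshold, and the accuracy figures are all invented for the example and do not come from any real training run.

```python
from dataclasses import dataclass

@dataclass
class Checkpoint:
    step: int
    clean_acc: float    # accuracy on unperturbed inputs
    robust_acc: float   # accuracy on adversarially perturbed inputs

def flag_over_optimisation(history, max_clean_drop=0.05):
    """Return the steps at which clean accuracy has fallen more than
    max_clean_drop below the best clean accuracy seen so far."""
    flagged = []
    best_clean = float("-inf")
    for ckpt in history:
        best_clean = max(best_clean, ckpt.clean_acc)
        if best_clean - ckpt.clean_acc > max_clean_drop:
            flagged.append(ckpt.step)
    return flagged

# Made-up numbers for illustration: robustness rises while ordinary accuracy erodes.
history = [
    Checkpoint(0, 0.95, 0.10),
    Checkpoint(100, 0.94, 0.55),
    Checkpoint(200, 0.91, 0.70),
    Checkpoint(300, 0.86, 0.78),
]
flagged = flag_over_optimisation(history)
```

Here only step 300 is flagged, since that is the first checkpoint at which ordinary accuracy has slipped more than five points below its best. A flag of this kind is a prompt for human review, not a verdict.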

Additionally, an interdisciplinary approach is crucial to refining adversarial training methodologies. By drawing upon the expertise of professionals from diverse backgrounds, we can develop innovative solutions that consider the complexities of AI alignment from multiple perspectives. This collaborative effort can not only contribute to the refinement of adversarial training techniques but also enrich the broader discourse on AI alignment.

Furthermore, the importance of open communication and collaboration among researchers, developers, and stakeholders in the field of AI cannot be overstated. By cultivating an atmosphere of knowledge-sharing and mutual support, we can learn from both our triumphs and our shortcomings, continually enhancing the methods employed in adversarial training and AI alignment more broadly.

In essence, the pursuit of a balanced approach to adversarial training necessitates the careful consideration of the interplay between its potential rewards and inherent risks. By thoughtfully designing adversarial examples, maintaining vigilant monitoring, embracing interdisciplinary collaboration, and fostering an environment of openness, we can hope to mitigate the dangers and maximise the benefits of this intriguing technique, propelling our digital descendants towards a future in harmony with our values and aspirations.

Future Directions in Adversarial Training Research

As we cast our gaze upon the horizon, we discern several key areas of research and development in the realm of adversarial training. These burgeoning fields hold the potential to reshape and refine our understanding of AI alignment, illuminating the path towards a more harmonious fusion of human values and artificial intellect.

One such area of exploration lies in the development of novel adversarial training techniques that could enhance the robustness and resilience of AI systems. By delving deeper into the complex interplay between adversaries and AI, we may uncover innovative strategies that can further improve the performance of our digital offspring in the face of adversity.

Another crucial avenue of research concerns the potential impact of emerging technologies and advancements on the future of adversarial training. The advent of quantum computing, for instance, could transform the landscape of AI alignment, unlocking new possibilities and challenges that we must carefully consider and navigate as we strive to harmonise human and artificial intellect.

Furthermore, as we navigate the intricate landscape of this domain, the significance of cooperation and knowledge exchange among researchers and practitioners in the field must not be underestimated. By cultivating an environment of shared learning and reciprocal assistance, we can contribute to the progression of adversarial training approaches and hasten our pursuit of AI alignment.

The road ahead is undeniably laden with both promise and uncertainty, and it is only through our unwavering commitment to collaboration and open dialogue that we can hope to steer the course of adversarial training research towards a future that aligns with our values and aspirations.

A Thoughtful Reflection on Adversarial Training and AI Alignment

In the words we have woven throughout this exploration, we have endeavoured to shed light upon the intricate tapestry of adversarial training in AI alignment. We have delved into the mechanics of this fascinating technique, scrutinised its potential advantages and inherent risks, and contemplated the delicate balance required to mitigate dangers and maximise benefits.

Developing a thorough and well-rounded comprehension of adversarial training is of paramount importance. Only by engaging in such a balanced analysis can we traverse the constantly shifting terrain of artificial intelligence, guiding our digital progeny towards a future that resonates with our ideals and ambitions.

As we conclude our journey, we invite you, dear reader, to remain informed and engaged in the ongoing conversation surrounding AI alignment and adversarial training. For it is through our collective wisdom and vigilance that we can guide the future of artificial intelligence towards a harmonious and benevolent destiny.